The JPEG Compression

Author: Alban Fichet alban.fichet@gmx.fr

License: BSD 3-Clause License https://opensource.org/licenses/BSD-3-Clause

Last modified: Nov. 1st 2021

This code is for educational purpose, not meant to be efficient or clever.

Introduction

The JPEG (the Joint Photographic Experts Group) version 1 is a widely used image compression method for pictures. This notebook explains how it works and the different steps of the compression algorithm.

This lossy compression scheme exploits the weakness of human vision to preserve only the most relevant information in a picture. It is mainly based on those two assumptions:

- We are more sensitive to luminance variation than to colour variation

- We are less sensitive to high frequencies than lower frequencies

import numpy as np

import scipy.fftpack

from PIL import Image

import matplotlib.pyplot as plt

from IPython.display import display, Markdown, Latex, HTML

import scipy.ndimage

plt.rcParams['figure.figsize'] = (20.0, 10.0)

plt.rcParams['figure.facecolor'] = 'white'

# Helper functions to nicely display a matrix

def display_matrix(m):

prt = '<table style="background:white; boder-style:solid; border:1px">'

for i in range(m.shape[0]):

prt += ' <tr>'

for j in range(m.shape[1]):

prt += ' <td>{}</td>'.format(m[i, j])

prt += ' </tr>'

prt += '</table>'

display(HTML(prt))

def display_matrix_float(m):

prt = '<table style="background:white; boder-style:solid; border:1px">'

for i in range(m.shape[0]):

prt += ' <tr>'

for j in range(m.shape[1]):

prt += ' <td>{0:.2f}</td>'.format(m[i, j])

prt += ' </tr>'

prt += '</table>'

display(HTML(prt))

We load our original image:

img = Image.open('sample.png')

image = np.asarray(img)

plt.title('Original image')

plt.imshow(image)

plt.show()

Compression

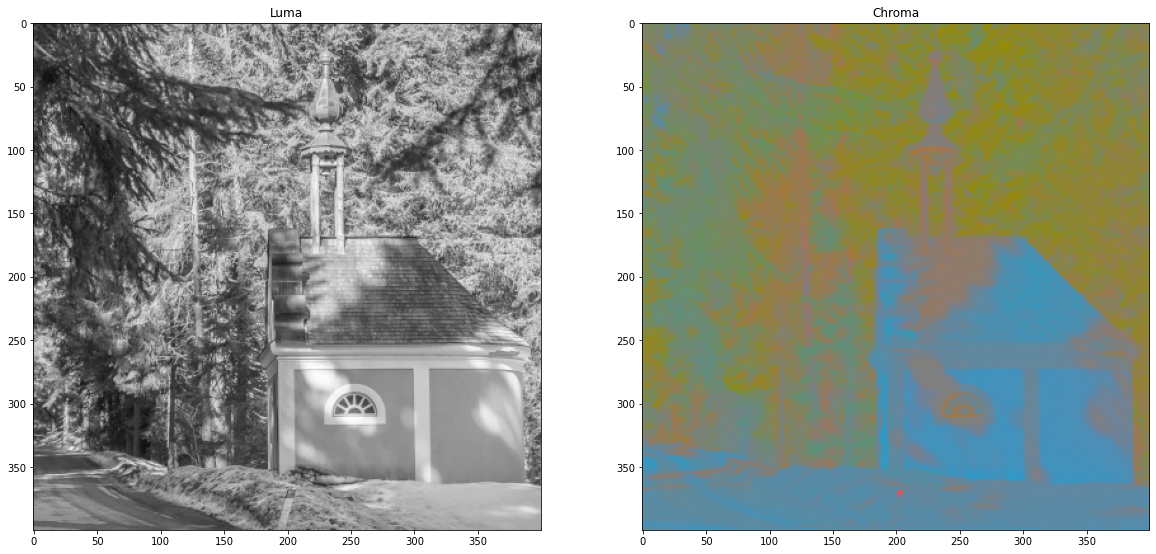

Sepration of Luma Y’ and Chroma CbCr

The separation of luma and chroma channels are based on Rec. 601. Chroma channel are translated to use the full unsigned 8bit range $[0; 255]$

\[\begin{align} Y' &=& &0.299 & \cdot R' &+ &0.587 & \cdot G' &+ &0.114 & \cdot B'\\ C_B &=& - &0.168736 & \cdot R' &- &0.331264 & \cdot G' &+ &0.5 & \cdot B' &+ 128\\ C_R &=& &0.5 & \cdot R' &- &0.418688 & \cdot G' &- &0.081312 & \cdot B' &+ 128 \end{align}\]def rgb_to_ycbcr_jpeg(rgb):

y = 0.299 * rgb[0] + 0.587 * rgb[1] + 0.114 * rgb[2]

cb = -0.168736 * rgb[0] - 0.331264 * rgb[1] + 0.5 * rgb[2] + 128

cr = 0.5 * rgb[0] - 0.418688 * rgb[1] - 0.081312 * rgb[2] + 128

return [int(min(max(y, 0), 255)), int(min(max(cb, 0), 255)), int(min(max(cr, 0), 255))]

def image_rgb_to_ycbcr_jpeg(image):

image_r, image_g, image_b = image[:,:,0], image[:,:,1], image[:,:,2]

image_y = np.clip(0.299 * image_r + 0.587 * image_g + 0.114 * image_b, 0, 255)

image_cb = np.clip(-0.168736* image_r - 0.331264 * image_g + 0.5 * image_b + 128, 0, 255)

image_cr = np.clip(0.5 * image_r - 0.418688 * image_g - 0.081312 * image_b + 128, 0, 255)

return np.array(np.dstack((image_y, image_cb, image_cr)), dtype=np.uint8)

# Will be described later...

def ycbcr_to_rgb_jpeg(ycbcr):

r = ycbcr[0] + 1.402 * (ycbcr[2] - 128)

g = ycbcr[0] - 0.344136 * (ycbcr[1] - 128) - 0.714136 * (ycbcr[2] - 128)

b = ycbcr[0] + 1.772 * (ycbcr[1] - 128)

return [int(min(max(r, 0), 255)), int(min(max(g, 0), 255)), int(min(max(b, 0), 255))]

def image_ycbcr_to_rgb_jpeg(image):

image_y, image_cb, image_cr = image[:,:,0], image[:,:,1], image[:,:,2]

image_r = np.clip(image_y + 1.402 * (image_cr - 128), 0, 255)

image_g = np.clip(image_y - 0.344136 * (image_cb - 128) - 0.714136 * (image_cr - 128), 0, 255)

image_b = np.clip(image_y + 1.772 * (image_cb - 128) , 0, 255)

return np.array(np.dstack((image_r, image_g, image_b)), dtype=np.uint8)

Example:

We separate the original RGB image into one Y’ (luma) image (black and white) and one two channels CbCr (chroma) image. The two images are going to follow the same pipeline but with different parameters.

disp_cbcr = np.zeros((*image.shape[0:2], 3), dtype=np.int32)

image_ycbcr = image_rgb_to_ycbcr_jpeg(image)

# Just to display the chroma channel

disp_cbcr = image_ycbcr_to_rgb_jpeg(

np.dstack((128 * np.ones(image.shape[0:2]),

image_ycbcr[:, :, 1],

image_ycbcr[:, :, 2])

)

)

plt.subplot(121)

plt.title('Luma')

plt.imshow(image_ycbcr[:,:, 0], cmap='gray', vmin=0, vmax=255)

plt.subplot(122)

plt.title('Chroma')

plt.imshow(disp_cbcr)

plt.show()

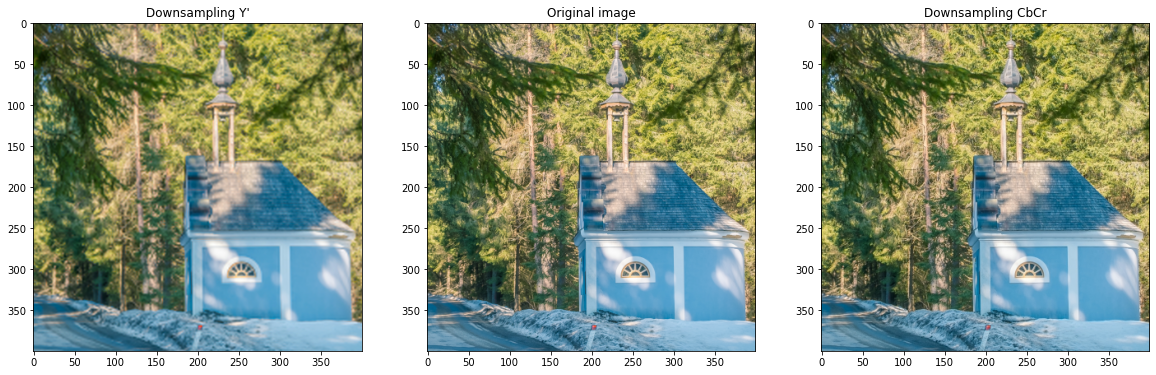

Lossy step: downsampling chroma

The chroma is less perceptually important than the luma. JPEG exploits this fact by reducing the resolution of the chroma channels while keeping the original resolution of the luma.

from skimage.measure import block_reduce

# Downsample each channel (Y is just for demo)

image_degraded_y = block_reduce(image_ycbcr[:, :, 0], block_size=(2, 2), func=np.mean)

image_degraded_cb = block_reduce(image_ycbcr[:, :, 1], block_size=(2, 2), func=np.mean)

image_degraded_cr = block_reduce(image_ycbcr[:, :, 2], block_size=(2, 2), func=np.mean)

# Rescale

display_degraded_y = scipy.ndimage.zoom(image_degraded_y , 2)

display_degraded_cb = scipy.ndimage.zoom(image_degraded_cb, 2)

display_degraded_cr = scipy.ndimage.zoom(image_degraded_cr, 2)

disp_degraded_y = image_ycbcr_to_rgb_jpeg(

np.dstack((display_degraded_y,

image_ycbcr[:, :, 1],

image_ycbcr[:, :, 2])

)

)

disp_degraded_cbcr = image_ycbcr_to_rgb_jpeg(

np.dstack((image_ycbcr[:, :, 0],

display_degraded_cb,

display_degraded_cr)

)

)

plt.subplot(131)

plt.title('Downsampling Y\'')

plt.imshow(disp_degraded_y)

plt.subplot(132)

plt.title('Original image')

plt.imshow(image)

plt.subplot(133)

plt.title('Downsampling CbCr')

plt.imshow(disp_degraded_cbcr)

plt.show()

Frequency separation (Discrete Cosine Transform - DCT)

After this step, the image is processed on each 8x8 image block. The process is the same for the luma and the chroma channels. Only the quantization matrices (see below) change between luma and chroma.

So, let’s consider the first 8 by 8 image block:

x, y = 240, 295

luma_block = image_ycbcr[y:y+8, x:x+8, 0]

display(Markdown('Original image block'))

display_matrix(luma_block)

# plt.imshow(luma_block, cmap='gray', vmin=0, vmax=255)

# plt.show()

Original image block

| 240 | 186 | 169 | 180 | 200 | 213 | 146 | 97 |

| 183 | 158 | 184 | 196 | 154 | 209 | 197 | 107 |

| 167 | 185 | 192 | 118 | 103 | 176 | 210 | 163 |

| 191 | 197 | 127 | 114 | 112 | 116 | 197 | 208 |

| 202 | 121 | 93 | 96 | 104 | 111 | 161 | 208 |

| 161 | 123 | 104 | 95 | 90 | 89 | 113 | 197 |

| 225 | 185 | 130 | 128 | 102 | 95 | 92 | 146 |

| 197 | 217 | 217 | 166 | 134 | 133 | 102 | 113 |

To center values at 0 we subtract -128 to each of the pixel values.

shifted_luma_block = luma_block - 128*np.ones((8, 8), dtype=np.int8)

display(Markdown('Shifted image block'))

display_matrix(shifted_luma_block)

Shifted image block

| 112 | 58 | 41 | 52 | 72 | 85 | 18 | -31 |

| 55 | 30 | 56 | 68 | 26 | 81 | 69 | -21 |

| 39 | 57 | 64 | -10 | -25 | 48 | 82 | 35 |

| 63 | 69 | -1 | -14 | -16 | -12 | 69 | 80 |

| 74 | -7 | -35 | -32 | -24 | -17 | 33 | 80 |

| 33 | -5 | -24 | -33 | -38 | -39 | -15 | 69 |

| 97 | 57 | 2 | 0 | -26 | -33 | -36 | 18 |

| 69 | 89 | 89 | 38 | 6 | 5 | -26 | -15 |

We perform a Discrete Cosine Transform (DCT):

\[F(u, v) = \frac{1}{4} \cdot C(u) \cdot C(v) \left[ \sum_{x=0}^{7} \sum_{y=0}^{7} f(x, y) \cdot \cos \frac{(2x + 1)u\pi}{16} \cdot \cos \frac{(2y + 1)v\pi}{16} \right]\]# Detailed implementation (not optimized and slow:

def dct2(img):

img_dct = np.zeros(img.shape)

for u in range(8):

C_u = 1 if u > 0 else 1/np.sqrt(2)

for v in range(8):

C_v = 1 if v > 0 else 1/np.sqrt(2)

for x in range(8):

for y in range(8):

img_dct[v, u] += (

img[y, x] *

np.cos((2*x + 1) * u * np.pi / 16) *

np.cos((2*y + 1) * v * np.pi / 16)

)

img_dct[v, u] *= C_u * C_v / 4

return img_dct

# Using Scipy built-in functions, much more efficient

# from: https://inst.eecs.berkeley.edu/~ee123/sp16/Sections/JPEG_DCT_Demo.html

def dct2(a):

return scipy.fftpack.dct(

scipy.fftpack.dct(a, axis=0, norm='ortho'),

axis=1, norm='ortho')

Now, we perform the Discrete Cosine Transform on the shifted pixel values:

dct_luma_block = dct2(shifted_luma_block)

display(Markdown('Discrete Fourier Transform'))

display_matrix_float(dct_luma_block)

Discrete Fourier Transform

| 206.63 | 89.84 | 132.14 | 20.69 | -6.38 | 21.78 | 10.34 | -9.27 |

| 107.56 | -47.00 | -68.92 | 70.88 | -56.38 | 43.75 | -4.70 | 2.99 |

| 71.36 | 123.74 | -135.99 | 39.27 | -21.42 | 0.37 | 26.97 | -15.16 |

| -63.09 | -19.58 | 15.91 | 40.62 | 86.13 | -9.98 | 12.84 | -3.90 |

| 36.38 | 21.60 | 2.21 | 18.73 | 20.37 | -17.55 | -11.33 | 8.37 |

| 1.71 | 32.17 | 41.69 | 3.39 | 10.75 | -29.78 | -19.34 | -3.27 |

| -9.67 | -13.28 | -0.28 | -20.52 | -25.90 | -19.34 | 17.99 | 11.69 |

| -1.26 | 8.35 | 15.80 | 19.94 | -1.87 | 5.10 | 16.99 | 5.16 |

Lossy step: quantization

The JPEG proposes two “standard” quantization tables, one for luma and the other for chroma. Note that some manufacturers use different quantization tables, sometimes computed on the fly on a per image basis. This discrepancy of quantization tables is used to detect edited photographs sometimes.

We are using the two proposed quantization tables from JPEG. You can find additional tables here: https://impulseadventure.com/photo/jpeg-quantization.html.

A quality parameter $Q \in ]0; 100]$ scales the quantization tables. This quality parameter defines a scaling factor:

\[S = \begin{cases} \frac{500}{Q} & \text{if } Q < 50 \\ 200 - 2\cdot Q & \text{otherwise} \end{cases}\]This scaling factor modifies the base quantization tables $T_b$ (either luma or chroma base table):

\[T_s[i] = \left\lfloor\frac{S\cdot T_b[i] + 50}{100} \right\rfloor\]In the final quantization table, the higher a number is, the lower the quality will be: the image is divided by each coefficient of the quantization table and will have more chance to round to 0 with an higher values in the quantization table.

# JPEG standard base quantization tables

base_quantization_luma = np.array(

[

[16, 11, 10, 16, 24, 40, 51, 61],

[12, 12, 14, 19, 26, 58, 60, 55],

[14, 13, 16, 24, 40, 57, 69, 56],

[14, 17, 22, 29, 51, 87, 80, 62],

[18, 22, 37, 56, 68, 109, 103, 77],

[24, 35, 55, 64, 81, 104, 113, 92],

[49, 64, 78, 87, 103, 121, 120, 101],

[72, 92, 95, 98, 112, 100, 103, 99]

])

base_quantization_chroma = np.array(

[

[17, 18, 24, 47, 99, 99, 99, 99],

[18, 21, 26, 66, 99, 99, 99, 99],

[24, 26, 56, 99, 99, 99, 99, 99],

[47, 66, 99, 99, 99, 99, 99, 99],

[99, 99, 99, 99, 99, 99, 99, 99],

[99, 99, 99, 99, 99, 99, 99, 99],

[99, 99, 99, 99, 99, 99, 99, 99],

[99, 99, 99, 99, 99, 99, 99, 99]

])

# Scaling function

def get_scaling(quality):

if quality < 50:

return 500 / quality

else:

return 200 - 2 * quality

# Derived quantization tables based on quality parameter

def get_quantization_table_luma(quality):

return np.array(np.floor((base_quantization_luma * get_scaling(quality) + 50) / 100),

dtype=np.uint8)

def get_quantization_table_chroma(quality):

return np.array(np.floor((base_quantization_chroma * get_scaling(quality) + 50) / 100),

dtype=np.uint8)

quality = 50

quantization_luma = get_quantization_table_luma(quality)

quantization_chroma = get_quantization_table_chroma(quality)

display(Markdown('Luma quantization table'))

display_matrix(quantization_luma)

display(Markdown('Chroma quantization table'))

display_matrix(quantization_chroma)

Luma quantization table

| 16 | 11 | 10 | 16 | 24 | 40 | 51 | 61 |

| 12 | 12 | 14 | 19 | 26 | 58 | 60 | 55 |

| 14 | 13 | 16 | 24 | 40 | 57 | 69 | 56 |

| 14 | 17 | 22 | 29 | 51 | 87 | 80 | 62 |

| 18 | 22 | 37 | 56 | 68 | 109 | 103 | 77 |

| 24 | 35 | 55 | 64 | 81 | 104 | 113 | 92 |

| 49 | 64 | 78 | 87 | 103 | 121 | 120 | 101 |

| 72 | 92 | 95 | 98 | 112 | 100 | 103 | 99 |

Chroma quantization table

| 17 | 18 | 24 | 47 | 99 | 99 | 99 | 99 |

| 18 | 21 | 26 | 66 | 99 | 99 | 99 | 99 |

| 24 | 26 | 56 | 99 | 99 | 99 | 99 | 99 |

| 47 | 66 | 99 | 99 | 99 | 99 | 99 | 99 |

| 99 | 99 | 99 | 99 | 99 | 99 | 99 | 99 |

| 99 | 99 | 99 | 99 | 99 | 99 | 99 | 99 |

| 99 | 99 | 99 | 99 | 99 | 99 | 99 | 99 |

| 99 | 99 | 99 | 99 | 99 | 99 | 99 | 99 |

We use the quantization table to divide the DCT coefficients. Depending on each quantization value, this will reduce the resolution of the DCT coefficients.

After this stage, many of the high frequency values are set to zero.

quantized_luma_block = np.array(dct_luma_block / quantization_luma, dtype=np.int16)

display(Markdown('Quantitized luma'))

display_matrix(quantized_luma_block)

Quantitized luma

| 12 | 8 | 13 | 1 | 0 | 0 | 0 | 0 |

| 8 | -3 | -4 | 3 | -2 | 0 | 0 | 0 |

| 5 | 9 | -8 | 1 | 0 | 0 | 0 | 0 |

| -4 | -1 | 0 | 1 | 1 | 0 | 0 | 0 |

| 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

Entropy encoding

After the quantization step, we organize the data in a zigzag fashion. This sorts frequencies from low to high. Since higher frequencies are more likely to round to a 0 value after the quantization step, successive 0s are later efficiently compressed by Run Length Encoding (RLE).

for i in range(16):

if i % 2 == 0:

p = min(i, 7)

q = i - p

else:

q = min(i, 7)

p = i - q

while p >= 0 and q >= 0 and p < 8 and q < 8:

print('B[{},{}]'.format(p, q), end=' ')

if i % 2 == 0:

p -= 1

q += 1

else:

p += 1

q -= 1

print()

B[0,0]

B[0,1] B[1,0]

B[2,0] B[1,1] B[0,2]

B[0,3] B[1,2] B[2,1] B[3,0]

B[4,0] B[3,1] B[2,2] B[1,3] B[0,4]

B[0,5] B[1,4] B[2,3] B[3,2] B[4,1] B[5,0]

B[6,0] B[5,1] B[4,2] B[3,3] B[2,4] B[1,5] B[0,6]

B[0,7] B[1,6] B[2,5] B[3,4] B[4,3] B[5,2] B[6,1] B[7,0]

B[7,1] B[6,2] B[5,3] B[4,4] B[3,5] B[2,6] B[1,7]

B[2,7] B[3,6] B[4,5] B[5,4] B[6,3] B[7,2]

B[7,3] B[6,4] B[5,5] B[4,6] B[3,7]

B[4,7] B[5,6] B[6,5] B[7,4]

B[7,5] B[6,6] B[5,7]

B[6,7] B[7,6]

B[7,7]

def zigzag_jpeg(mat):

ret_val = []

for i in range(16):

if i % 2 == 0:

p = min(i, 7)

q = i - p

else:

q = min(i, 7)

p = i - q

while p >= 0 and q >= 0 and p < 8 and q < 8:

ret_val.append(mat[p, q])

if i % 2 == 0:

p -= 1

q += 1

else:

p += 1

q -= 1

return ret_val

zigzag_luma = zigzag_jpeg(quantized_luma_block)

print(zigzag_luma)

[12, 8, 8, 5, -3, 13, 1, -4, 9, -4, 2, -1, -8, 3, 0, 0, -2, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]

Run Length Encoding compression

The zigzag organized data is compressed using Run Length Encoding (RLE) for AC elements (all but the first element B[0, 0]). We store each element with the number of 0s before it: (n_zeros_before, value). If there is only zeros remaining in the list, we place the terminating element (0, 0) early to end the sequence.

def compress_runlength_jpeg(l):

n_zeros = 0

ret_val = [l[0]]

for el in l[1:]:

if el == 0:

n_zeros += 1

else:

ret_val.append((n_zeros, el))

n_zeros = 0

ret_val.append((0, 0))

return ret_val

runlength_luma = compress_runlength_jpeg(zigzag_luma)

print(runlength_luma)

[12, (0, 8), (0, 8), (0, 5), (0, -3), (0, 13), (0, 1), (0, -4), (0, 9), (0, -4), (0, 2), (0, -1), (0, -8), (0, 3), (2, -2), (0, 1), (6, 1), (6, 1), (0, 0)]

Huffman coding

This step is not implemented yet!

To further compress the bitstream, Huffman coding is used. We are not providing the method here. You can find more info there: https://impulseadventure.com/photo/jpeg-huffman-coding.html

Decompression

To decompress the image, all previous steps are done in reverse.

Entropy decoding

Huffman decoding

This step is not implemented yet!

See: https://impulseadventure.com/photo/jpeg-huffman-coding.html

RLE decompression

Since the length of the sequence is variable for an 8x8 block, we also need to return the first index of the next sequence.

def uncompress_runlength_jpeg(l):

ret_val = [l[0]]

n_el = 1

idx_next_frame = 1

for n_zeros, el in l[1:]:

if n_zeros == 0 and el == 0:

# Termination of the sequence, fill remaining values with 0

ret_val += (64 - n_el) * [0]

break

else:

# Append the number of zeros

ret_val += n_zeros * [0]

ret_val.append(el)

n_el += n_zeros + 1

idx_next_frame += 1

return ret_val, idx_next_frame + 1

zigzag_luma_un, next_frame_luma = uncompress_runlength_jpeg(runlength_luma[0:])

print(zigzag_luma_un)

[12, 8, 8, 5, -3, 13, 1, -4, 9, -4, 2, -1, -8, 3, 0, 0, -2, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]

Restoring 8x8 blocks from zigzag represented elements

def unzigzag_jpeg(l):

ret_val = np.zeros((8, 8), dtype=np.int16)

idx = 0

for i in range(16):

if i % 2 == 0:

p = min(i, 7)

q = i - p

else:

q = min(i, 7)

p = i - q

while p >= 0 and q >= 0 and p < 8 and q < 8:

ret_val[p, q] = l[idx]

idx += 1

if i % 2 == 0:

p -= 1

q += 1

else:

p += 1

q -= 1

return ret_val

quantized_luma_block_un = unzigzag_jpeg(zigzag_luma_un)

display_matrix(quantized_luma_block_un)

| 12 | 8 | 13 | 1 | 0 | 0 | 0 | 0 |

| 8 | -3 | -4 | 3 | -2 | 0 | 0 | 0 |

| 5 | 9 | -8 | 1 | 0 | 0 | 0 | 0 |

| -4 | -1 | 0 | 1 | 1 | 0 | 0 | 0 |

| 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

Reversing the quantization

we can revert the quantization step knowing which quantization matrix is used. The quantization matrix is provided in the JFIF file.

Due to rounding errors and aliasing, the reconstructed block won’t be exactly the same as the original one (lossy compression).

dct_luma_block_un = quantized_luma_block_un * quantization_luma

display_matrix(dct_luma_block_un)

| 192 | 88 | 130 | 16 | 0 | 0 | 0 | 0 |

| 96 | -36 | -56 | 57 | -52 | 0 | 0 | 0 |

| 70 | 117 | -128 | 24 | 0 | 0 | 0 | 0 |

| -56 | -17 | 0 | 29 | 51 | 0 | 0 | 0 |

| 36 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

Inversing the Discrete Cosine Transform

We perform the inverse DCT:

\[f(x, y) = \frac{1}{4}\left[ \sum_{u=0}^{7}\sum_{v=0}^{7} C(u) \cdot C(v) \cdot F(u, v) \cdot \cos \frac{(2x + 1)u\pi}{16} \cdot \cos \frac{(2y + 1)v\pi}{16} \right]\]# Detailed implementation (not optimized and slow!):

def idct2(img_dct):

img = np.zeros(img_dct.shape)

for x in range(8):

for y in range(8):

for u in range(8):

C_u = 1 if u > 0 else 1/np.sqrt(2)

for v in range(8):

C_v = 1 if v > 0 else 1/np.sqrt(2)

img[y, x] += (

img_dct[v, u] *

C_u * C_v *

np.cos((2*x + 1) * u * np.pi / 16) *

np.cos((2*y + 1) * v * np.pi / 16)

)

img[y, x] *= 1/4

return img

# Using Scipy built-in functions, much more efficient

# from: https://inst.eecs.berkeley.edu/~ee123/sp16/Sections/JPEG_DCT_Demo.html

def idct2(a):

return scipy.fftpack.idct(

scipy.fftpack.idct( a, axis=0 , norm='ortho'),

axis=1 , norm='ortho')

shifted_luma_block_un = idct2(dct_luma_block_un)

display_matrix_float(shifted_luma_block_un)

| 81.29 | 61.68 | 46.60 | 55.72 | 75.46 | 68.68 | 22.50 | -25.17 |

| 62.68 | 63.01 | 45.93 | 26.99 | 36.44 | 54.12 | 35.66 | -2.61 |

| 49.89 | 61.03 | 38.00 | -5.46 | -5.35 | 36.70 | 55.68 | 40.74 |

| 51.15 | 43.49 | 11.47 | -26.86 | -27.03 | 15.77 | 60.00 | 79.05 |

| 53.07 | 12.86 | -24.97 | -37.55 | -34.47 | -14.43 | 32.36 | 79.19 |

| 54.17 | 2.51 | -31.63 | -28.99 | -31.32 | -37.93 | -6.24 | 44.44 |

| 66.62 | 36.16 | 13.99 | 6.39 | -12.54 | -33.54 | -21.46 | 9.92 |

| 83.86 | 80.69 | 69.20 | 43.62 | 9.25 | -14.67 | -15.22 | -4.57 |

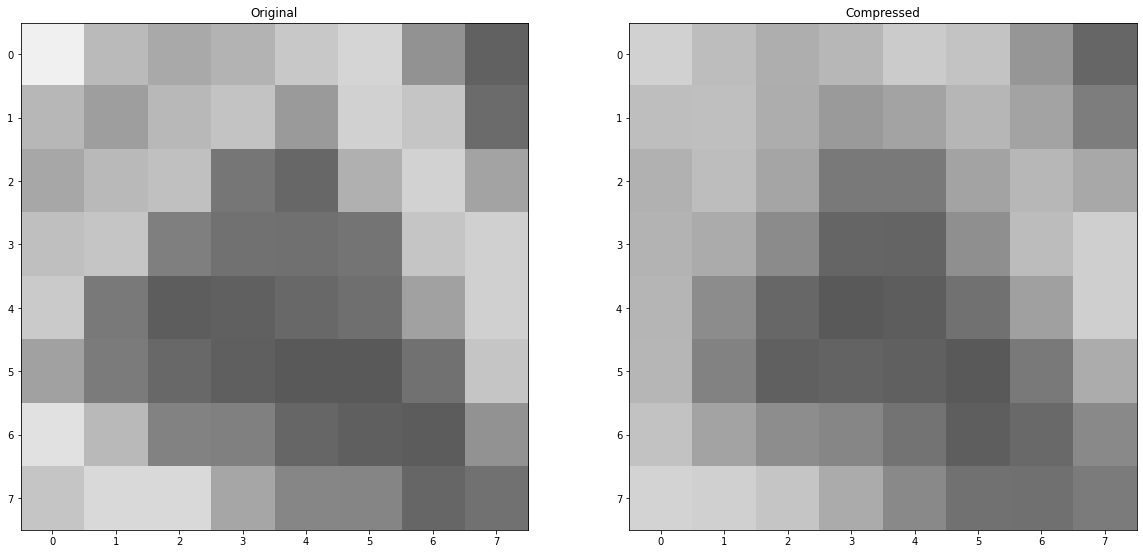

Translating values back to 0..255

Values provided to the DCT were centered at zero. After performing the inverse DCT, we need to shift back the values from 0 to 255 by adding 128.

luma_block_un = np.array(shifted_luma_block_un + 128 * np.ones((8, 8)), dtype=np.uint8)

#display(Markdown('Decompressed data'))

#display_matrix(luma_block_un)

plt.subplot(121)

plt.title('Original')

plt.imshow(luma_block, cmap='gray', vmin=0, vmax=255)

plt.subplot(122)

plt.title('Compressed')

plt.imshow(luma_block_un, cmap='gray', vmin=0, vmax=255)

plt.show()

Rescaling the chroma

Chroma channels were downsampled. We rescale the chroma channels (CbCr) to match the resolution of the luma channel (Y’).

Retrieving RGB values from Y’CbCr

The reverse transformation is defined as:

\[\begin{align} R' &=& Y' & & & + 1.402 & \cdot (C_R-128) \\ G' &=& Y' & - 0.344136 & \cdot (C_B-128)& - 0.714136 & \cdot (C_R-128) \\ B' &=& Y' & + 1.772 & \cdot (C_B-128)& \end{align}\]def ycbcr_to_rgb_jpeg(ycbcr):

r = ycbcr[0] + 1.402 * (ycbcr[2] - 128)

g = ycbcr[0] - 0.344136 * (ycbcr[1] - 128) - 0.714136 * (ycbcr[2] - 128)

b = ycbcr[0] + 1.772 * (ycbcr[1] - 128)

return [int(min(max(r, 0), 255)), int(min(max(g, 0), 255)), int(min(max(b, 0), 255))]

The full algorithm

Compression

def compress_jpeg(image, quality, downsampling_cbcr):

# Conversion from RGB to Y'CbCr

image_ycbcr = image_rgb_to_ycbcr_jpeg(image)

image_y = image_ycbcr[:, :, 0]

# Reduce resolution of chroma channels

image_cb = block_reduce(image_ycbcr[:, :, 1], block_size=(downsampling_cbcr, downsampling_cbcr), func=np.mean)

image_cr = block_reduce(image_ycbcr[:, :, 2], block_size=(downsampling_cbcr, downsampling_cbcr), func=np.mean)

# Run DCT and quantization on each 8x8 block

c_image_y = np.zeros(image_y.shape , dtype=np.int16)

c_image_cb = np.zeros(image_cb.shape, dtype=np.int16)

c_image_cr = np.zeros(image_cr.shape, dtype=np.int16)

# Final compressed strings

str_image_y = []

str_image_cb = []

str_image_cr = []

quantization_luma = get_quantization_table_luma(quality)

quantization_chroma = get_quantization_table_chroma(quality)

centering_matrix = 128*np.ones((8, 8), dtype=np.int16)

for i in np.r_[:c_image_y.shape[0]:8]:

for j in np.r_[:c_image_y.shape[1]:8]:

c_image_y[i:i+8, j:j+8] = dct2(image_y[i:i+8, j:j+8] - centering_matrix) / quantization_luma

zigzag_image_y = zigzag_jpeg(c_image_y[i:i+8, j:j+8])

str_image_y += compress_runlength_jpeg(zigzag_image_y)

for i in np.r_[:c_image_cb.shape[0]:8]:

for j in np.r_[:c_image_cb.shape[1]:8]:

c_image_cb[i:i+8, j:j+8] = dct2(image_cb[i:i+8, j:j+8] - centering_matrix) / quantization_chroma

zigzag_image_cb = zigzag_jpeg(c_image_cb[i:i+8, j:j+8])

str_image_cb += compress_runlength_jpeg(zigzag_image_cb)

for i in np.r_[:c_image_cr.shape[0]:8]:

for j in np.r_[:c_image_cr.shape[1]:8]:

c_image_cr[i:i+8, j:j+8] = dct2(image_cr[i:i+8, j:j+8] - centering_matrix) / quantization_chroma

zigzag_image_cr = zigzag_jpeg(c_image_cr[i:i+8, j:j+8])

str_image_cr += compress_runlength_jpeg(zigzag_image_cr)

return str_image_y, str_image_cb, str_image_cr

Decompression

import scipy.ndimage

def uncompress_jpeg(

str_image_y, str_image_cb, str_image_cr,

width, height,

quantization_luma, quantization_chroma,

downsampling_cbcr):

image_rgb = np.zeros((width, height, 3), dtype=np.uint8)

image_y = np.zeros((width, height))

image_cb = np.zeros((width, height))

image_cr = np.zeros((width, height))

c_image_cb = np.zeros((width//downsampling_cbcr, height//downsampling_cbcr))

c_image_cr = np.zeros((width//downsampling_cbcr, height//downsampling_cbcr))

next_frame_y = 0

centering_matrix = 128*np.ones((8, 8), dtype=np.int16)

# Restore original values for luma

for i in np.r_[:height: 8]:

for j in np.r_[:width: 8]:

uncompressed_y, nf = uncompress_runlength_jpeg(str_image_y[next_frame_y:])

next_frame_y += nf

image_y[i:i+8, j:j+8] = unzigzag_jpeg(uncompressed_y)

image_y[i:i+8, j:j+8] = quantization_luma * image_y[i:i+8, j:j+8]

image_y[i:i+8, j:j+8] = idct2(image_y[i:i+8, j:j+8]) + centering_matrix

# Restore values for chroma

next_frame_cb = 0

next_frame_cr = 0

for i in np.r_[:height//downsampling_cbcr: 8]:

for j in np.r_[:width//downsampling_cbcr: 8]:

uncompressed_cb, nf_cb = uncompress_runlength_jpeg(str_image_cb[next_frame_cb:])

next_frame_cb += nf_cb

c_image_cb[i:i+8, j:j+8] = unzigzag_jpeg(uncompressed_cb)

c_image_cb[i:i+8, j:j+8] = quantization_chroma * c_image_cb[i:i+8, j:j+8]

c_image_cb[i:i+8, j:j+8] = idct2(c_image_cb[i:i+8, j:j+8]) + centering_matrix

uncompressed_cr, nf_cr = uncompress_runlength_jpeg(str_image_cr[next_frame_cr:])

next_frame_cr += nf_cr

c_image_cr[i:i+8, j:j+8] = unzigzag_jpeg(uncompressed_cr)

c_image_cr[i:i+8, j:j+8] = quantization_chroma * c_image_cr[i:i+8, j:j+8]

c_image_cr[i:i+8, j:j+8] = idct2(c_image_cr[i:i+8, j:j+8]) + centering_matrix

# Rescale the chroma channels

image_cb = scipy.ndimage.zoom(c_image_cb, downsampling_cbcr)

image_cr = scipy.ndimage.zoom(c_image_cr, downsampling_cbcr)

# Convert to RGB

image_rgb = image_ycbcr_to_rgb_jpeg(np.dstack((image_y, image_cb, image_cr)))

return image_rgb

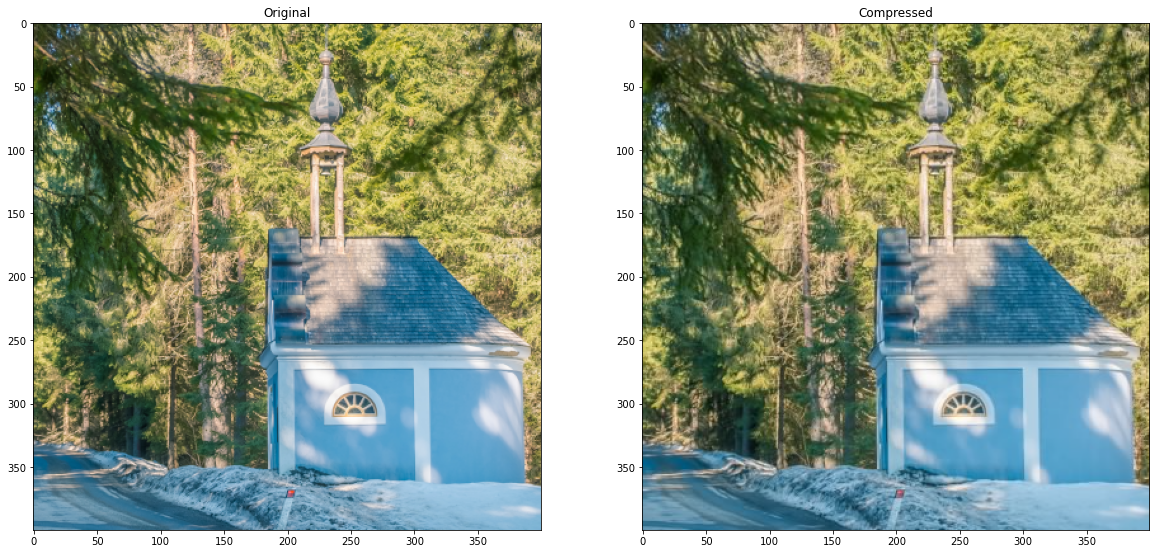

Example on a full image

We first gather metadata from the original pictures (width and height) and set the quality factor.

quality = 90

height, width, _ = image.shape

downsampling_cbcr = 2

Now, we can compress our image. We get strings representing the compressed image.

str_image_y, str_image_cb, str_image_cr = compress_jpeg(image, quality, downsampling_cbcr)

print('Raw size:')

print(str(3 * width * height / 1024) + 'KiB')

print('Compressed size (approx):')

print(str((len(str_image_y) + len(str_image_cb) + len(str_image_cr)) * 2 / 1024) + 'KiB')

Raw size:

468.75KiB

Compressed size (approx):

158.138671875KiB

We can decompress the image from number strings given width, height, quantization tables and chroma downsampling factor, all provided in the JFIF file.

quantization_luma = get_quantization_table_luma(quality)

quantization_chroma = get_quantization_table_chroma(quality)

image_decompressed = uncompress_jpeg(

str_image_y, str_image_cb, str_image_cr,

width, height,

quantization_luma, quantization_chroma,

downsampling_cbcr)

plt.subplot(121)

plt.title('Original')

plt.imshow(image)

plt.subplot(122)

plt.title('Compressed')

plt.imshow(image_decompressed)

plt.show()

result = Image.fromarray(image_decompressed.astype(np.uint8))

result.save('compressed_uncompressed.png')